More Big Data: What Has Truth Got To Do With It?

There is a debate in the Big Data world that hinges on an old conundrum: how can we know what we don’t know? It sounds trite at first blush, but it is in fact a very hard problem. When faced with massive data sets that our guts tell us hold veins of brilliance, how do we find the nuggets of gold? Do we begin by asking questions and hoping that the data supports our theories? Or might our framing questions constrict our inquiry, causing us to miss new discoveries entirely?

Ontologies from the Bottom Up

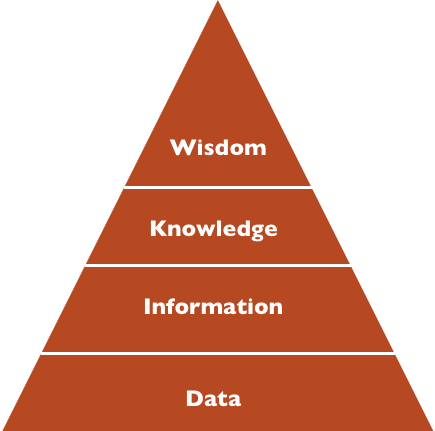

In the world of information sciences, there is a well-known model called the DIKW Hierarchy. This pyramid has four levels: data, information, knowledge and wisdom. Big Data sits at its base. Organizing the information and knowledge levels above it is the work of ontologies. Ontologies are formal representations of concepts linked through relationships; they contain elements such as individuals, attributes, relationships and functions. Ontologies underlie everything from complex information systems like artificial intelligence to everyday digital experiences such as shopping on Amazon.
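To make "concepts linked through relationships" a little more concrete, here is a minimal sketch of an ontology stored as (subject, predicate, object) triples. The class name `Ontology`, the predicates `is_a`, `instance_of` and `has_price`, and the retail items are all hypothetical; real ontology languages are far richer than this. The point is only that once concepts, individuals and attributes are linked formally, a system can infer that an individual filed under "Novel" is also a "Book" and a "Product" without anyone saying so directly.

```python
from dataclasses import dataclass, field


@dataclass
class Ontology:
    """Concepts, individuals and attributes stored as (subject, predicate, object) triples."""

    triples: set[tuple[str, str, str]] = field(default_factory=set)

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def objects(self, subject: str, predicate: str) -> set[str]:
        """All objects linked to `subject` by `predicate`."""
        return {o for s, p, o in self.triples if s == subject and p == predicate}

    def ancestors(self, concept: str) -> set[str]:
        """Every broader concept reachable by following "is_a" links."""
        seen: set[str] = set()
        frontier = [concept]
        while frontier:
            for parent in self.objects(frontier.pop(), "is_a"):
                if parent not in seen:
                    seen.add(parent)
                    frontier.append(parent)
        return seen

    def types_of(self, individual: str) -> set[str]:
        """Direct classes of an individual plus everything those classes inherit from."""
        direct = self.objects(individual, "instance_of")
        return direct.union(*(self.ancestors(c) for c in direct))


# Hypothetical retail example: a concept hierarchy, one individual, one attribute.
shop = Ontology()
shop.add("Novel", "is_a", "Book")
shop.add("Book", "is_a", "Product")
shop.add("item-123", "instance_of", "Novel")
shop.add("item-123", "has_price", "12.99")
print(shop.types_of("item-123"))
```

Note that every link here was written by hand in advance, which is exactly the top-down habit the next paragraph questions.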

Ontologies are not born, however; they are made. We begin with a theory, and from there we build an ontology to test that theory against the data. At least, that is how it has typically worked. With massive data sets, some researchers are starting to think that we need a looser way of dealing with ontologies. Jeff Jonas, Chief Scientist of Entity Analytic Solutions at the IBM Software Group, commented in a report from the Aspen Institute that huge datasets may help us “find and see dynamically changing ontologies without having to try to prescribe them in advance. Taxonomies and ontologies are things that you might discover by observation, and watch evolve over time.”

That sounds appealing, but in practice it is very hard to do. John Clippinger from Harvard suggested in the same report that the way forward might be to construct a series of “self-organizing grammars” flexible enough to let relationships between data elements find and organize themselves. The Economist reported this week on a project by David Blei of Princeton and John Lafferty of Carnegie Mellon that is starting to do just that. The project focuses on automatically tagging data. In their model, the user selects the number of topics he or she wants to extract from the data. The system creates a “bin” for each topic and starts sorting. First it throws out common words, then assigns the remaining words at random to the bins. It then looks for pairs of words in each bin that occur together more often than chance would predict, and joins those that pass the test into permanent pairs. Words that don’t find a mate are dropped into other bins, and the process is repeated until all possible pairs are found. If the process is then run on the pairs of words, networks of meaning begin to emerge within a single bin.
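The bin-and-pair procedure above can be sketched in a few dozen lines. This is a toy that follows the lay description as summarized here, not Blei and Lafferty's published method, which is a probabilistic topic model rather than this literal procedure. Several details are assumptions: the tiny stop-word list, the use of "lift" (observed co-occurrence across documents divided by what independence would predict) as the "more often than chance" test, the `threshold` of 1.5, a fixed number of `rounds` instead of "until all possible pairs are found", and reshuffling unmatched words across all bins (possibly back into the same one), since strictly moving them to a different bin can leave two partners swapping places forever. The function names `lift` and `bin_and_pair` are hypothetical.

```python
import itertools
import random
import re

# A tiny, assumed stop-word list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "for", "on"}


def doc_word_sets(docs):
    """Lower-case each document, drop stop words, keep the set of remaining words."""
    return [
        {w for w in re.findall(r"[a-z]+", d.lower()) if w not in STOPWORDS}
        for d in docs
    ]


def lift(a, b, sets):
    """How much more often a and b share a document than independence would predict."""
    n = len(sets)
    ca = sum(a in s for s in sets)
    cb = sum(b in s for s in sets)
    cab = sum(a in s and b in s for s in sets)
    return cab * n / (ca * cb) if ca and cb else 0.0


def bin_and_pair(docs, num_bins, threshold=1.5, rounds=10, seed=0):
    """Toy bin-and-pair procedure; returns the permanent pairs found in each bin."""
    rng = random.Random(seed)
    sets = doc_word_sets(docs)
    bins = [[] for _ in range(num_bins)]
    for word in sorted(set().union(*sets)):
        bins[rng.randrange(num_bins)].append(word)  # random initial assignment
    pairs = [[] for _ in range(num_bins)]
    for _ in range(rounds):
        unmatched = []
        for i, words in enumerate(bins):
            # Score every candidate pair in this bin, strongest association first.
            scored = sorted(
                ((lift(a, b, sets), a, b) for a, b in itertools.combinations(words, 2)),
                reverse=True,
            )
            used = set()
            for score, a, b in scored:
                if score <= threshold:
                    break  # every remaining pair fails the "more than chance" test
                if a not in used and b not in used:
                    pairs[i].append((a, b))  # a permanent pair
                    used.update((a, b))
            unmatched.extend(w for w in words if w not in used)
        if not unmatched:
            break
        # Words without a mate are reshuffled across the bins and the process repeats.
        bins = [[] for _ in range(num_bins)]
        for word in unmatched:
            bins[rng.randrange(num_bins)].append(word)
    return pairs


docs = ["the gene and the dna", "dna of the gene", "the stock market", "market for the stock"]
print(bin_and_pair(docs, num_bins=2))
```

With more bins, which pairs surface within the allotted rounds depends on the random seed. The second layer the article mentions, running the same procedure over the pairs themselves, is left out.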

An approach like this gets exciting when it is applied not just to the topics in a set of academic papers but to the evolution of ideas themselves. Dr. Blei is now using the process to track how topics evolve and change over time. Ultimately, a system like this could begin to track the influential elements in papers, blogs and news articles that lead to shifts in the way we think about things.
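A crude way to picture "tracking a topic over time" is to measure, year by year, what share of documents mention a topic. The sketch below assumes the topic is already known and represented by a hand-picked word set (the `topic_words` parameter and the `min_hits` rule are hypothetical). Dr. Blei's own work learns topics from the data with probabilistic models; this sketch does not, and it only measures how common a fixed topic is, not how its vocabulary shifts.

```python
import re
from collections import defaultdict


def topic_trend(docs, topic_words, min_hits=2):
    """Share of each year's documents that mention at least `min_hits` words of one topic.

    docs: iterable of (year, text) pairs.
    topic_words: a fixed, hand-picked set of words standing in for a learned topic.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for year, text in docs:
        words = set(re.findall(r"[a-z]+", text.lower()))
        totals[year] += 1
        if len(words & topic_words) >= min_hits:
            hits[year] += 1
    return {year: hits[year] / totals[year] for year in sorted(totals)}


# Made-up corpus for illustration only.
corpus = [
    (2000, "Gene and DNA sequencing"),
    (2000, "Stock market crash"),
    (2001, "DNA and gene expression"),
    (2001, "Gene, DNA and protein folding"),
]
print(topic_trend(corpus, {"gene", "dna"}))  # → {2000: 0.5, 2001: 1.0}
```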

Truth Isn’t Part of the Business Model

The big advantage of bottom-up approaches is that we don’t impose as much of our own thinking on the data. We set the initial conditions that allow patterns to emerge, and we watch what happens. This is especially useful with very large data sets, where it becomes almost impossible not to sort before you analyze, making decisions about “outliers” and other seemingly irrelevant elements. The problem is that data gets dirty as soon as we touch it, and the ways in which it gets dirty inevitably steer the outcomes we derive from it. This makes “truth” a moving target. As Jeff Jonas of IBM commented, “There is no such thing as a single version of truth. And as you assemble and correlate data, you have to let new observations change your mind about earlier assertions.”
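A small, made-up example shows how a single cleaning decision steers the result. The data and both rules are hypothetical: rule A keeps every observation, while rule B treats anything above three times the median as an "outlier". Neither rule is wrong in the abstract; which one gives the "true" average depends on whether the large value was a data-entry error or a genuine bulk order, and that is not something the numbers alone can tell us.

```python
from statistics import mean, median


def keep_all(values):
    """Cleaning rule A: trust every observation."""
    return list(values)


def drop_large(values, factor=3.0):
    """Cleaning rule B (hypothetical): discard anything above `factor` x the median."""
    cap = factor * median(values)
    return [v for v in values if v <= cap]


# Made-up daily order counts; the 95 could be an entry error or a real bulk order.
daily_orders = [10, 12, 11, 13, 12, 95]

for rule in (keep_all, drop_large):
    print(rule.__name__, mean(rule(daily_orders)))
# prints:
# keep_all 25.5
# drop_large 11.6
```

The same six numbers yield an average that differs by more than a factor of two, purely as a consequence of how they were cleaned.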

In some contexts, truth matters more than in others. In public health, it matters a great deal. If the context is a recommendation system that correlates information to suggest other items for you to buy, on the other hand, it might not matter much at all. What matters there is that people buy more stuff. Steven Baker of Business Week commented, “Truth could just be something that we deal with in our spare time because it’s not really part of the business model.”

The notion that truth becomes extraneous is troubling. Big Data is a product of our actions, but it is housed, mined and reconfigured by companies and organizations whose interests are often quite different from our own. Beyond that, Big Data is increasingly analyzed, packaged and sold back to us in the form of products or policies that shape our lives and the public dialogue on issues and social mores. How these products and policies are arrived at is often controversial and obscure, even to the initiated. If truth isn’t really part of the business model, then what happens to us, the consumers and producers of Big Data, who might want some insight into the various layers of truth in the world around us? The key question going forward is whether Big Data is fundamentally a private asset or a public good. The answer will undoubtedly be “both”. How we shape transparency around the intended uses and outcomes of Big Data is a critical public conversation.